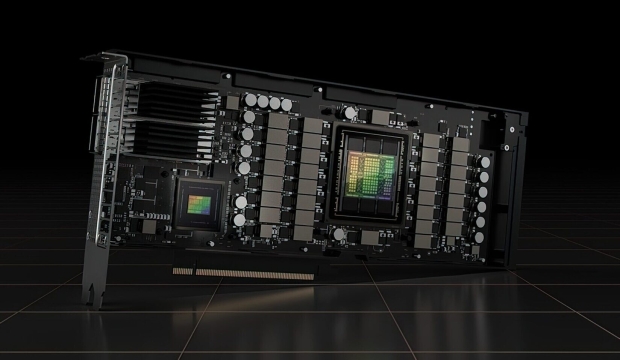

NVIDIA Hopper H100 GPU with 120GB HBM2e (or HBM3) memory teased

NVIDIA's new high-end Hopper H100 GPU with 80GB HBM2e memory could soon be joined by a beefier Hopper H100 GPU with 120GB of HBM2e memory.

NVIDIA has just announced its next-gen Ada Lovelace GPU architecture, while the Hopper GPU architecture continues to serve the data center. Now a new Hopper H100 GPU with 120GB of HBM2e (or HBM3, the picture doesn't make it clear) memory has surfaced.

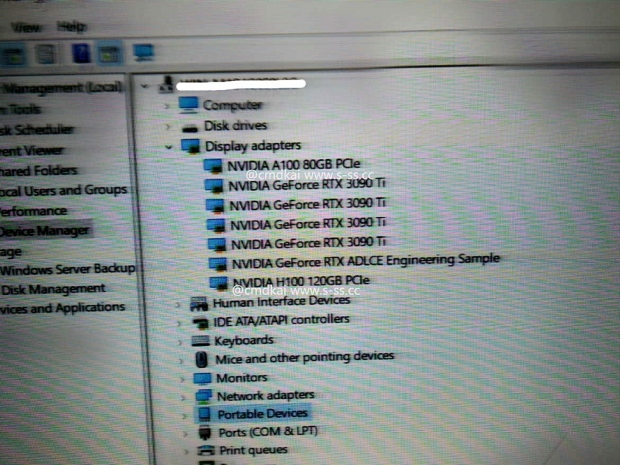

A new PCIe-based graphics card called the "NVIDIA H100 120GB PCIe" has surfaced, and it looks like a beefed-up NVIDIA Hopper H100 GPU with 120GB of HBM2e/HBM3 memory. NVIDIA offers its Hopper H100 GPU in several SKUs, including SXM and PCIe models, with the SXM variant using higher-end HBM3 memory.

The model shown in the screenshot reportedly has 120GB of HBM memory, which is a sign of things to come for NVIDIA customers. For now, 80GB of memory is "all" you get, but 120GB variants, at least in PCIe form, appear to be launching in the coming months.

For context, NVIDIA's Hopper H100 GPU currently ships with 80GB of HBM3 or HBM2e memory; no 120GB variant has been released. In its full implementation, the H100 GPU can feature 6 HBM3 or HBM2e stacks for up to 120GB of memory on a 6144-bit memory bus, delivering an insane 3TB/sec+ of memory bandwidth. The SXM5-based NVIDIA Hopper H100 GPU tops out at 80GB of HBM3 memory, using 5 HBM3 stacks across a 5120-bit memory bus.
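The memory figures above hang together arithmetically. The sketch below is a back-of-the-envelope check, not an NVIDIA specification: it assumes each HBM2e/HBM3 stack exposes the standard 1024-bit interface and that capacity is split evenly across stacks. The helper names are hypothetical, and the per-pin data rate it derives is only the floor implied by the article's "3TB/sec+" figure, not a published number.

```python
# Back-of-the-envelope check of the H100 memory figures quoted above.
# Assumptions (not from NVIDIA spec sheets): each HBM stack has a 1024-bit
# interface, and total capacity is spread evenly across active stacks.

HBM_STACK_BUS_BITS = 1024  # per-stack interface width for HBM2e/HBM3


def bus_width_bits(stacks: int) -> int:
    """Total memory bus width for a given number of active HBM stacks."""
    return stacks * HBM_STACK_BUS_BITS


def capacity_per_stack_gb(total_gb: float, stacks: int) -> float:
    """Capacity each stack must hold if memory is spread evenly."""
    return total_gb / stacks


def min_pin_rate_gbps(bandwidth_tb_s: float, bus_bits: int) -> float:
    """Per-pin data rate (Gbit/s) needed to reach a target bandwidth.

    bandwidth (bytes/s) = bus_bits * pin_rate (bits/s) / 8
    """
    return bandwidth_tb_s * 1e12 * 8 / bus_bits / 1e9


full_bus = bus_width_bits(6)  # full GH100: 6 stacks
sxm5_bus = bus_width_bits(5)  # H100 SXM5: 5 stacks
print(full_bus, sxm5_bus)
print(capacity_per_stack_gb(120, 6), capacity_per_stack_gb(80, 5))
print(round(min_pin_rate_gbps(3.0, full_bus), 2))
```

With these assumptions the 6-stack bus comes out at 6144 bits and the 5-stack bus at 5120 bits, matching the text, while a 120GB six-stack configuration implies 20GB per stack versus 16GB per stack on the 80GB SXM5 part.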

Another interesting detail is that whoever sent this screenshot has an incredible amount of GPU silicon in their system: an NVIDIA A100 80GB PCIe, 4 x NVIDIA GeForce RTX 3090 Ti graphics cards, an "NVIDIA GeForce RTX ADLCE Engineering Sample" (almost certainly an Ada Lovelace engineering-sample GPU), and the star of the show: the NVIDIA H100 120GB PCIe card.

The pre-production Ada Lovelace graphics card runs at a lowered TDP of 350W, whereas the final RTX 4090 is rated at a default TDP of up to 450W.

The full implementation of the GH100 GPU includes the following units:

- 8 GPCs, 72 TPCs (9 TPCs/GPC), 2 SMs/TPC, 144 SMs per full GPU

- 128 FP32 CUDA Cores per SM, 18432 FP32 CUDA Cores per full GPU

- 4 Fourth-Generation Tensor Cores per SM, 576 per full GPU

- 6 HBM3 or HBM2e stacks, 12 512-bit Memory Controllers

- 60 MB L2 Cache

- Fourth-Generation NVLink and PCIe Gen 5
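The unit counts in the list above are multiplicative, so the per-GPU totals can be cross-checked from the per-GPC, per-TPC, and per-SM figures. The sketch below is purely illustrative: `GH100Config` is a hypothetical name, and its defaults are simply the numbers listed above, with totals derived rather than hard-coded.

```python
# Cross-check of the full GH100 unit counts listed above.
# GH100Config is a hypothetical illustration; its defaults are the
# per-unit figures from the list, and the totals are derived from them.
from dataclasses import dataclass


@dataclass(frozen=True)
class GH100Config:
    gpcs: int = 8
    tpcs_per_gpc: int = 9
    sms_per_tpc: int = 2
    fp32_cores_per_sm: int = 128
    tensor_cores_per_sm: int = 4
    memory_controllers: int = 12
    controller_bus_bits: int = 512

    @property
    def tpcs(self) -> int:
        return self.gpcs * self.tpcs_per_gpc

    @property
    def sms(self) -> int:
        return self.tpcs * self.sms_per_tpc

    @property
    def fp32_cores(self) -> int:
        return self.sms * self.fp32_cores_per_sm

    @property
    def tensor_cores(self) -> int:
        return self.sms * self.tensor_cores_per_sm

    @property
    def memory_bus_bits(self) -> int:
        return self.memory_controllers * self.controller_bus_bits


gh100 = GH100Config()
print(gh100.tpcs, gh100.sms, gh100.fp32_cores, gh100.tensor_cores, gh100.memory_bus_bits)
# prints: 72 144 18432 576 6144
```

The derived totals agree with the list: 72 TPCs, 144 SMs, 18,432 FP32 CUDA cores, 576 Tensor Cores, and a 6144-bit memory bus from twelve 512-bit controllers.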

As you can see, the full implementation of NVIDIA's Hopper H100 GPU is monstrous: 144 SMs and 18,432 FP32 CUDA cores, backed by 60MB of L2 cache and support for 4th Gen NVLink and PCIe 5.0.